- 1. Register With Google Search Console

- 2. Check For Crawling and Indexing Errors

- 3. Check for Duplicate Versions of Your Site

- 4. Check the Use of Canonical Tags

- 5. Use the URL Inspection Tool To Check For Blocked Resources

- 6. Review Robots.txt File

- 7. Check Your Website On Mobile

- 8. Find And Fix Broken Links

- 9. Check Your Site Speed And Core Web Vitals Scores

- 10. Add And Test Structured Data

- 11. Create And Submit An XML Sitemap To Search Engines

- 12. Make Sure You're Using HTTPS

- 13. Use SEO Friendly URLs

- 14. Implement Hreflang For Multilingual Websites

- 15. Review Your Site Structure

- Learn More About Technical SEO

Technical SEO is the process of optimizing your website so that search engines can successfully crawl and index your content. It's usually the first step in the SEO process because any technical issues can negatively impact your website's ability to rank in Google and other search engines.

Use our technical SEO checklist to audit your website's technical infrastructure using best practices to ensure your website is free of critical SEO problems.

1. Register With Google Search Console

The first thing to do, if you haven't done so already, is to register your website with Google Search Console (GSC). Among other features, GSC helps you troubleshoot technical SEO errors.

The process is easy:

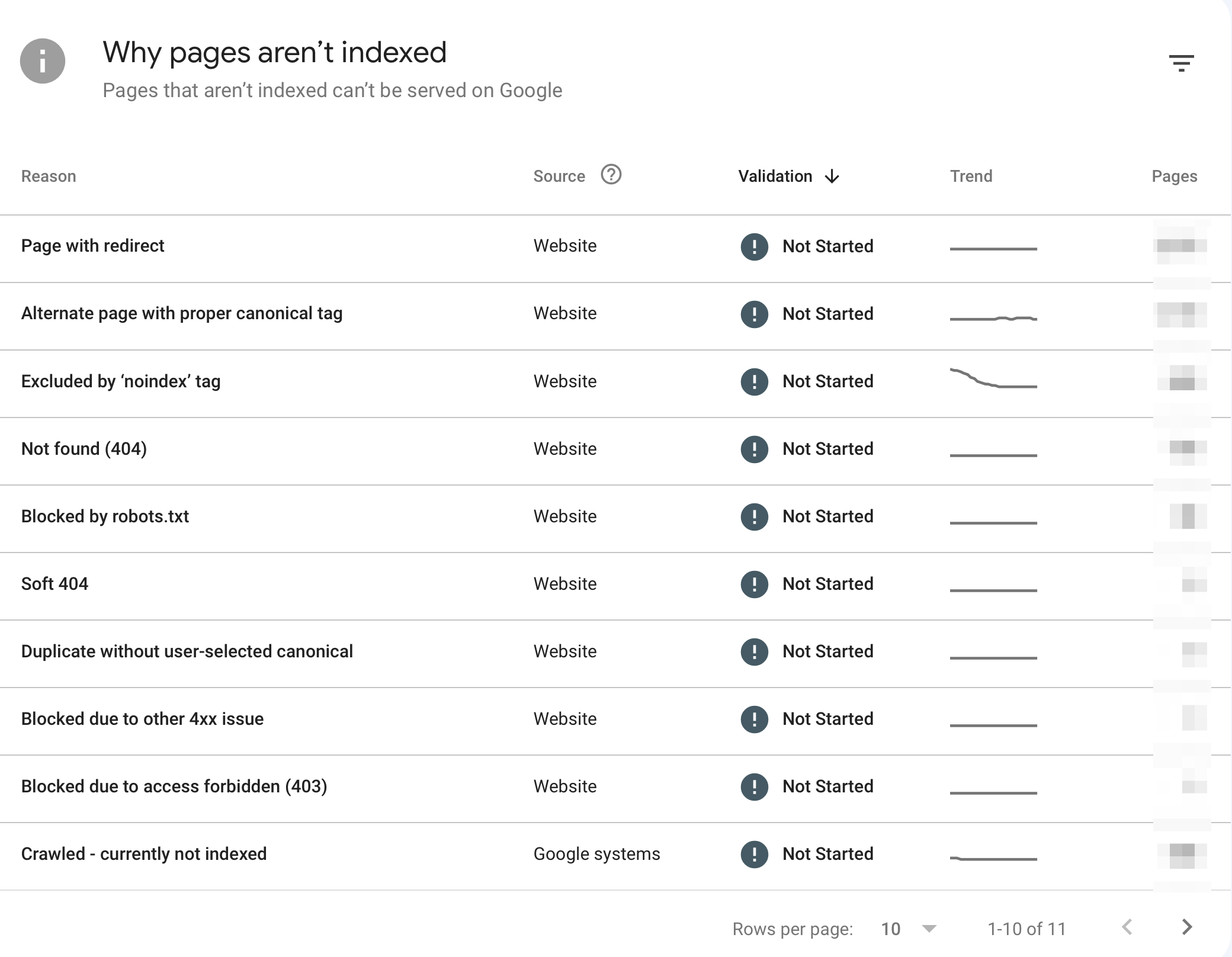

2. Check For Crawling and Indexing Errors

The next step is to check for any critical errors that may prevent search engines from crawling and indexing your pages. If you don't know the difference between the two and how they can affect your rankings, read our guide on how search engines work.

Log in to GSC and click "Pages" under "Indexing" to review the Page indexing report. Your report should look like the screenshot below.

Google lists various reasons why pages or resources might not be indexed. Keep in mind that it's normal for a website to have unindexed pages. Google does not index all the content it crawls.

Nevertheless, you should review the following sections to ensure that you're not accidentally blocking Googlebot from accessing your content and that there are no other serious issues:

- Excluded by ‘noindex’ tag - This means the page carries a noindex directive that tells search engines not to index it. Check that this is intentional for each listed page.

- Not found (404) - This error indicates that the page does not exist, leading to a ‘Page Not Found’ error.

- Soft 404 - This occurs when a page displays a ‘Not Found’ message to users but returns a successful (200) status code to search engines.

- Blocked due to other 4xx issues - This error signifies that client errors prevent the page from being accessed or indexed.

- Blocked due to access forbidden (403) - This means the server refuses to allow Googlebot to access the page, likely due to permissions settings.

For all the above, click on the title to get a list of the affected pages and click on individual pages to find out why they are not indexed.
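If you want to spot-check a handful of URLs yourself, the categories above can be approximated from the HTTP status code and the page HTML. The following is a minimal sketch, not how Google classifies pages: the function name, the list of "not found" phrases, and the simple regular expression are all assumptions made for illustration.

```python
import re

# Phrases that often appear on "not found" pages (an assumed list; extend it
# to match the wording your own site uses).
NOT_FOUND_HINTS = ("page not found", "404 not found", "nothing was found")

# Matches <meta name="robots" content="...noindex...">. This simple pattern
# assumes the name attribute comes before the content attribute.
NOINDEX_META = re.compile(
    r'<meta[^>]+name=["\']robots["\'][^>]+content=["\'][^"\']*noindex',
    re.IGNORECASE,
)


def classify_indexing_issue(status: int, html: str = "", x_robots_tag: str = "") -> str:
    """Map a fetched response to one of the report categories listed above."""
    if status == 404:
        return "Not found (404)"
    if status == 403:
        return "Blocked due to access forbidden (403)"
    if 400 <= status < 500:
        return "Blocked due to other 4xx issue"
    if status == 200:
        # noindex can be set in the HTML or in the X-Robots-Tag response header.
        if "noindex" in x_robots_tag.lower() or NOINDEX_META.search(html):
            return "Excluded by 'noindex' tag"
        # A 200 response whose body reads like an error page is a soft 404 candidate.
        if any(hint in html.lower() for hint in NOT_FOUND_HINTS):
            return "Possible soft 404"
        return "No issue detected"
    return "Other"
```

Treat the result as a hint for which pages to open in the Page indexing report, not as a replacement for it.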

For more information, read our guides on:

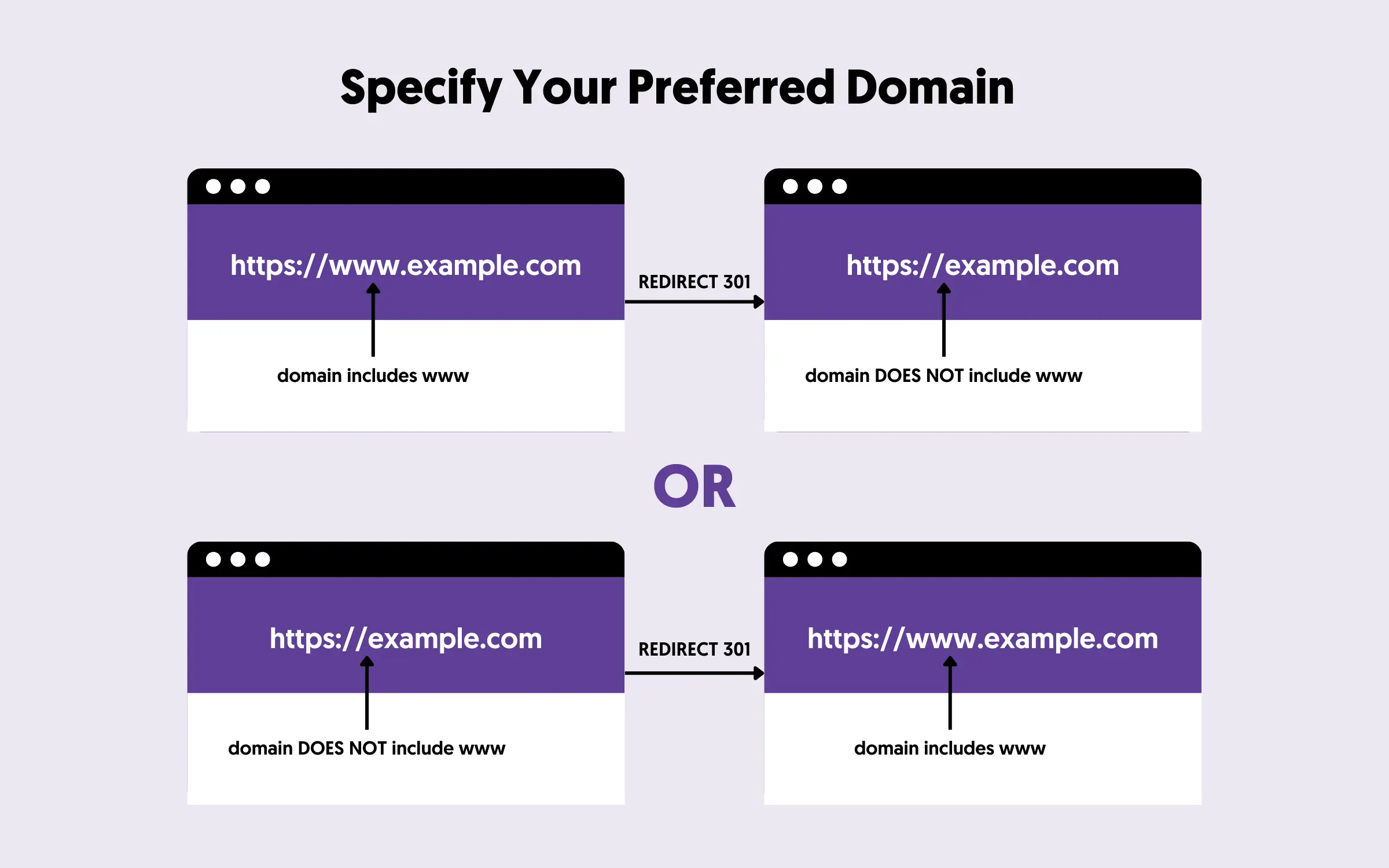

3. Check for Duplicate Versions of Your Site

The next check is easy to perform but very important. By default, a website with HTTPS installed is accessible using the following URLs:

- https://www.example.com

- https://example.com

- http://www.example.com

- http://example.com

While this makes little difference to users, search engines treat these as four different sites. To solve this technical issue, do the following:

First, make sure that your non-HTTPS version (i.e., http://) redirects to your HTTPS version. Open a new browser window and type http://your-domain.com. It should redirect to https://your-domain.com. If that's not the case, read our guide on migrating your website to HTTPS.

Then, decide which version of your domain you want to keep, i.e., https://your-domain.com (without the www) or https://www.your-domain.com (with the www), and redirect the other version to it using 301 redirects.

In terms of SEO, there is no benefit to choosing one version over the other. It's a matter of personal preference.

If you're using WordPress, you can change this setting in "Settings / General".
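The browser check described above can also be scripted. The sketch below lists the four variants of a host and reports the ones that do not end up on your preferred version. The function names are hypothetical, and `final_url` is just one possible way to follow redirects using Python's standard library.

```python
import urllib.request
from urllib.parse import urlsplit


def site_variants(host: str) -> list[str]:
    """Return the four scheme/host combinations a site can answer on."""
    bare = host[4:] if host.startswith("www.") else host
    return [
        f"{scheme}://{prefix}{bare}/"
        for scheme in ("https", "http")
        for prefix in ("www.", "")
    ]


def final_url(url: str) -> str:
    """Follow redirects and return the URL that is finally served."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.geturl()


def redirect_problems(preferred: str, final_urls: dict[str, str]) -> list[str]:
    """List the variants whose final URL is not the preferred version.

    `final_urls` maps each variant to the URL it lands on after redirects.
    """
    host = urlsplit(preferred).hostname or ""
    return [v for v in site_variants(host) if final_urls.get(v) != preferred]
```

For a live check you could build the mapping with `{v: final_url(v) for v in site_variants("your-domain.com")}` and pass it to `redirect_problems`. An empty list means all four variants resolve to the version you chose. Note that this only confirms where each variant ends up, not that the redirect uses a 301 status.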

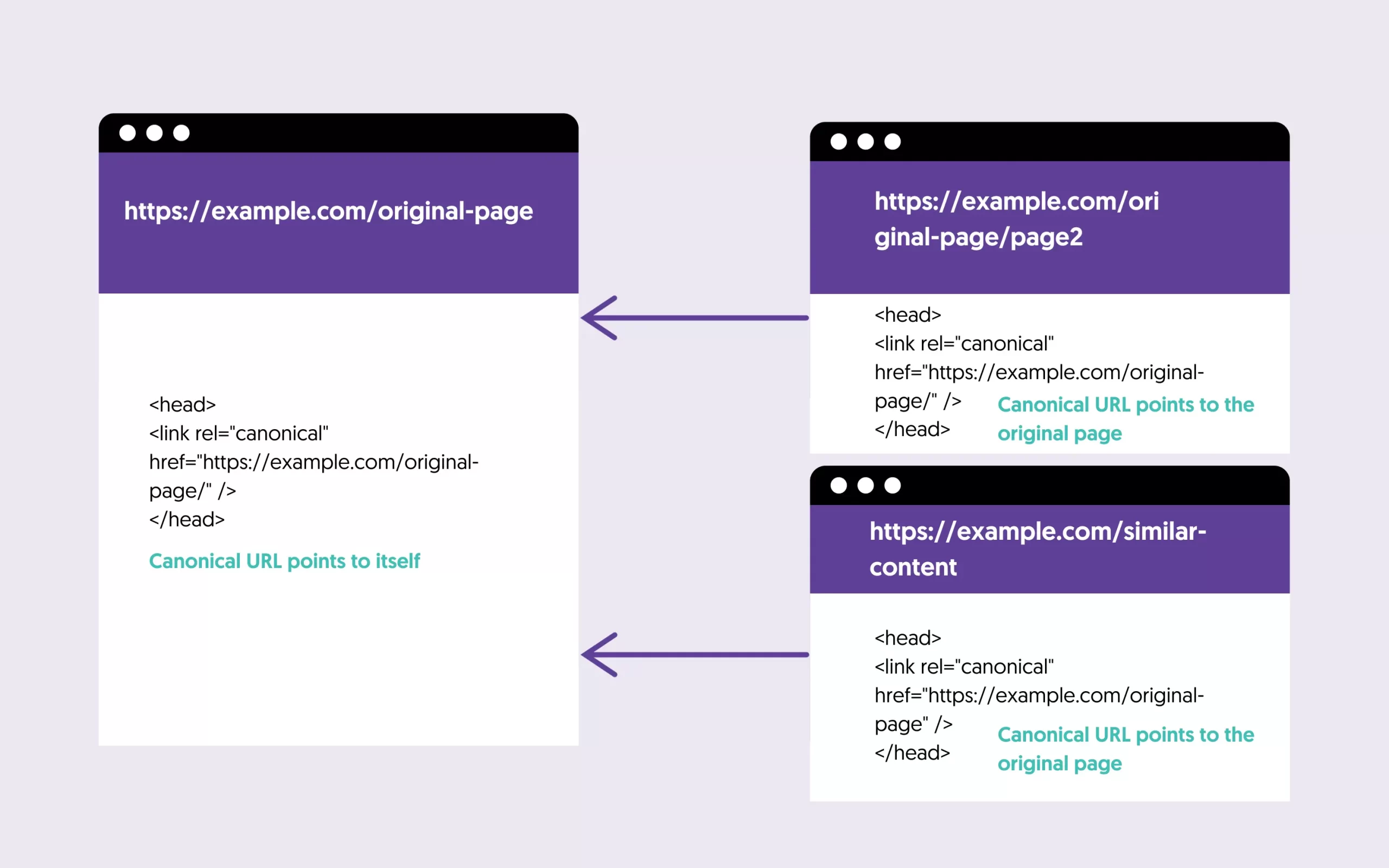

4. Check the Use of Canonical Tags

Another element you need to check is URL canonicalization. Canonicalization refers to specifying a main (canonical) URL for every page. This helps Google identify which version of a page to index in case of duplicate content.

This may sound like a complicated task, but to make it easier, follow these steps:

- Open any page on your website and view the source code.

- Search for the word "canonical" and note the href value of the <link rel="canonical" ...> tag.

- If the URL points to itself (see example below), everything is okay, and there is nothing else to do.

- If the URL points to another page, Google will index the canonical page and not this page. If this is valid, leave it as is; if not, consider changing the canonical URL.

- If there is no canonical URL defined, read our beginner's guide on Canonical URLs to get instructions on what you need to do.
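The manual steps above can be sketched in a few lines using Python's built-in HTML parser. The class and function names are illustrative, and treating URLs that differ only by a trailing slash as equal is a simplifying assumption.

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalFinder(HTMLParser):
    """Collect the href of the first <link rel="canonical"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        rel = (attr.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and self.canonical is None:
            self.canonical = attr.get("href")


def canonical_status(page_url: str, html: str) -> str:
    """Report whether the canonical is missing, self-referencing, or points elsewhere."""
    finder = CanonicalFinder()
    finder.feed(html)
    if finder.canonical is None:
        return "missing"
    # Simplification: ignore a trailing-slash difference when comparing.
    if finder.canonical.rstrip("/") == page_url.rstrip("/"):
        return "self-referencing"
    return f"points to {finder.canonical}"
```

A result of "self-referencing" corresponds to the "everything is okay" case; the other two results are the ones to review by hand.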

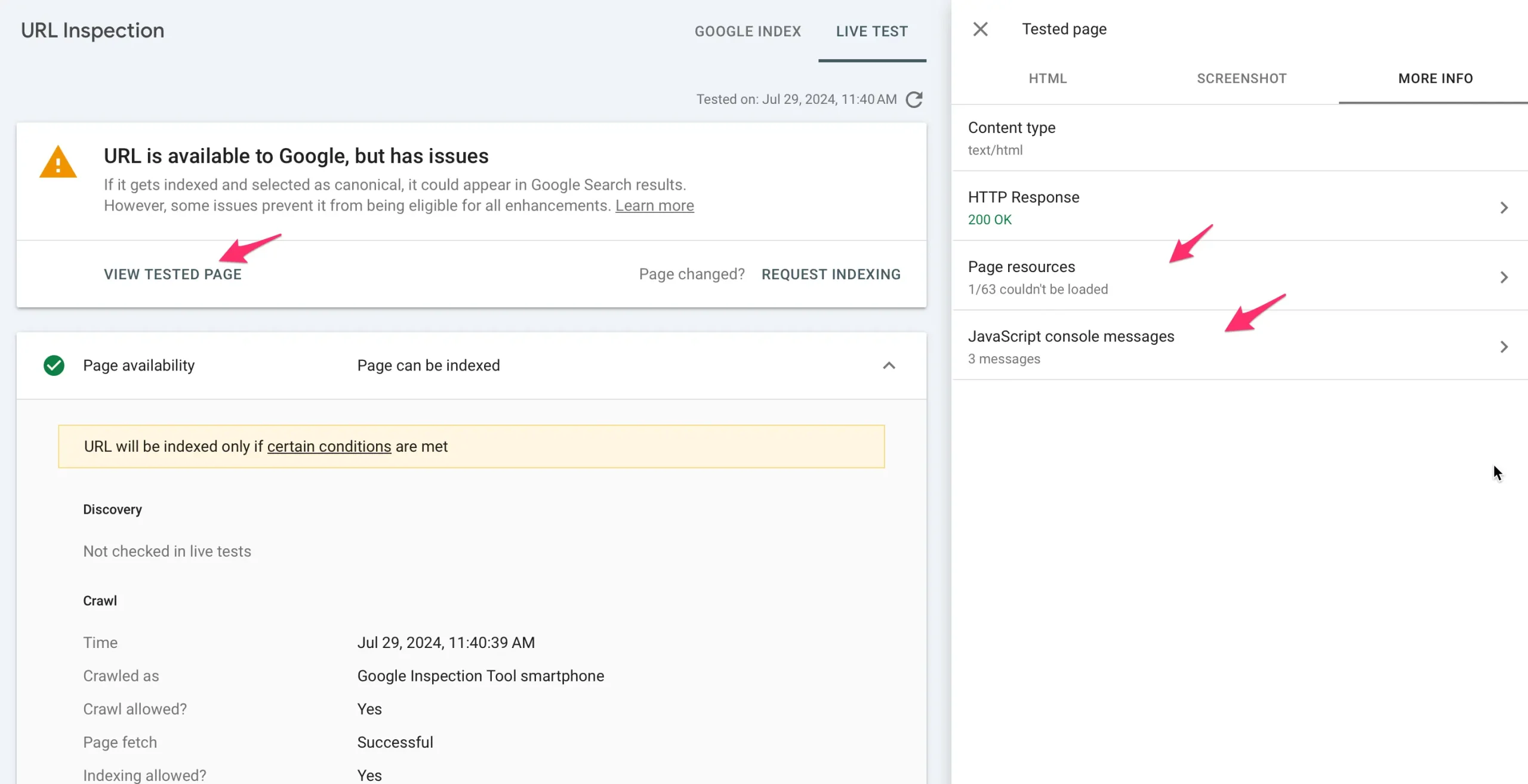

5. Use the URL Inspection Tool To Check For Blocked Resources

An important check you need to perform is whether Google can access all the resources (images, CSS, JavaScript) needed for your page to render correctly. You can do this using the URL Inspection tool in Google Search Console.

Although the check should be done on all page types, you can start by checking your homepage.

- Log in to Google Search Console and enter your homepage URL in the search bar at the top.

- Click the Test Live URL button and then the VIEW TESTED PAGE button.

- Click on the Screenshot tab and ensure that your page is formatted correctly.

- Click the MORE INFO tab and look at the Page Resources and JavaScript Console Messages. If you have accidentally blocked any important resources, you should see them there.

Repeat the above process to check for blog posts, products, and other post types you may have on your website.

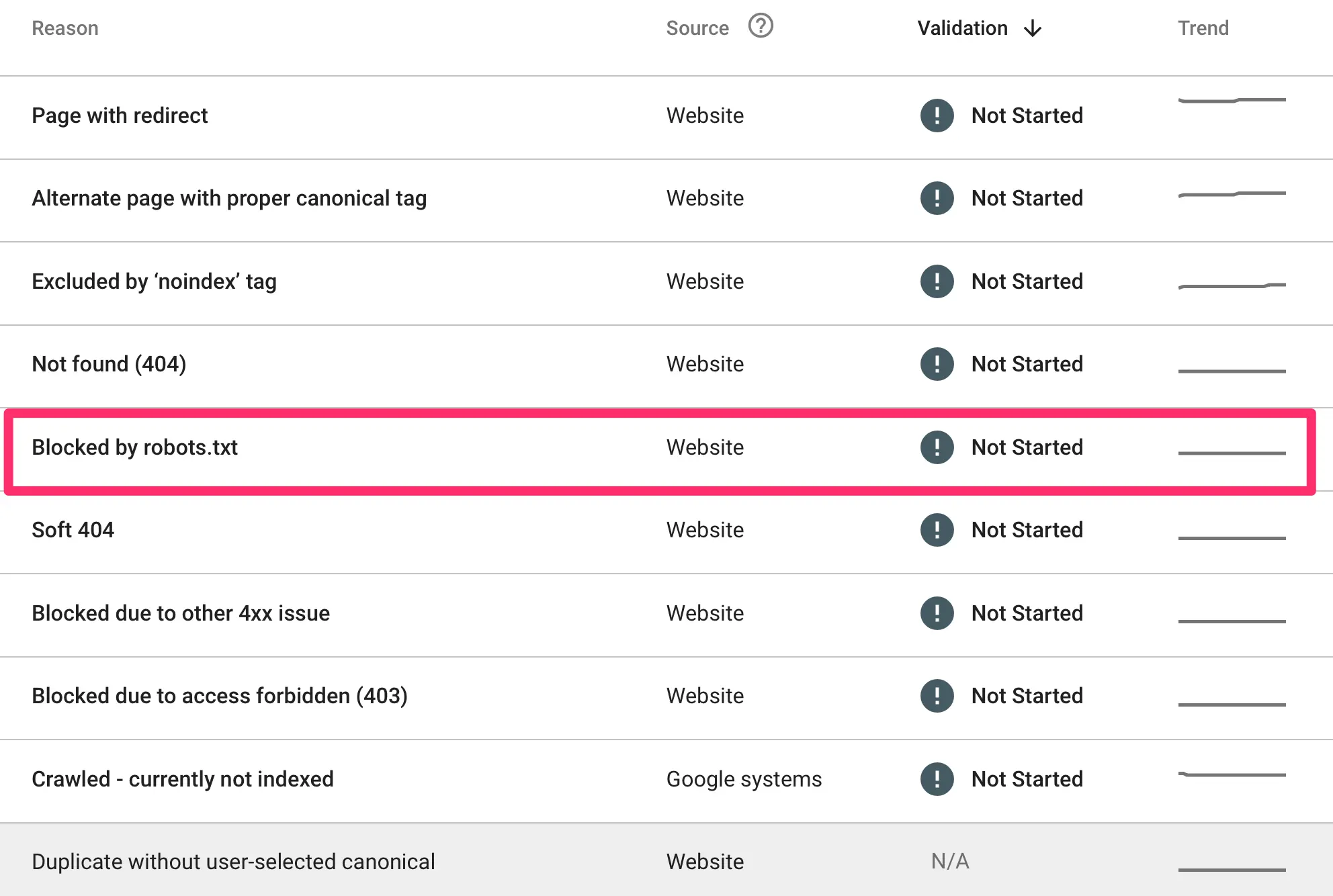

6. Review Robots.txt File

The robots.txt file tells search engines which pages or parts of your website they may or may not crawl. Blocking pages by mistake can keep important content out of the index and thus negatively impact your rankings.

To view the file's contents, open a new browser window and navigate to https://www.yourdomain.com/robots.txt.

If you get a 404 error, it means you have no robots.txt file defined. You should follow the instructions in the guide below to create one.

Another way to check your robots.txt is through Google Search Console.

- Log in to your account and go to Settings.

- Click OPEN REPORT next to robots.txt.

- You should see several files (for each domain variation).

To find out which pages of your website are blocked by robots.txt, go to the "Page Indexing report" and look for the "blocked by robots.txt" section. Examine the listed pages to identify any that are blocked by mistake.
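You can also test a list of important URLs against your robots.txt rules locally. The sketch below uses Python's standard `urllib.robotparser` module; the function name and sample rules are assumptions for illustration. Keep in mind that this parser does not replicate every detail of how Googlebot interprets robots.txt (wildcard patterns, for example), so use it as a first pass only.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical rules where the whole blog was blocked by mistake.
rules = """User-agent: *
Disallow: /wp-admin/
Disallow: /blog/
"""

important_pages = [
    "https://example.com/blog/seo-tips/",
    "https://example.com/about/",
]

print(blocked_urls(rules, important_pages))
```

With these sample rules, the blog post is reported as blocked while the about page is not.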

For instructions on how to update and optimize your robots.txt, read: Robots.txt And SEO: Easy Guide For Beginners

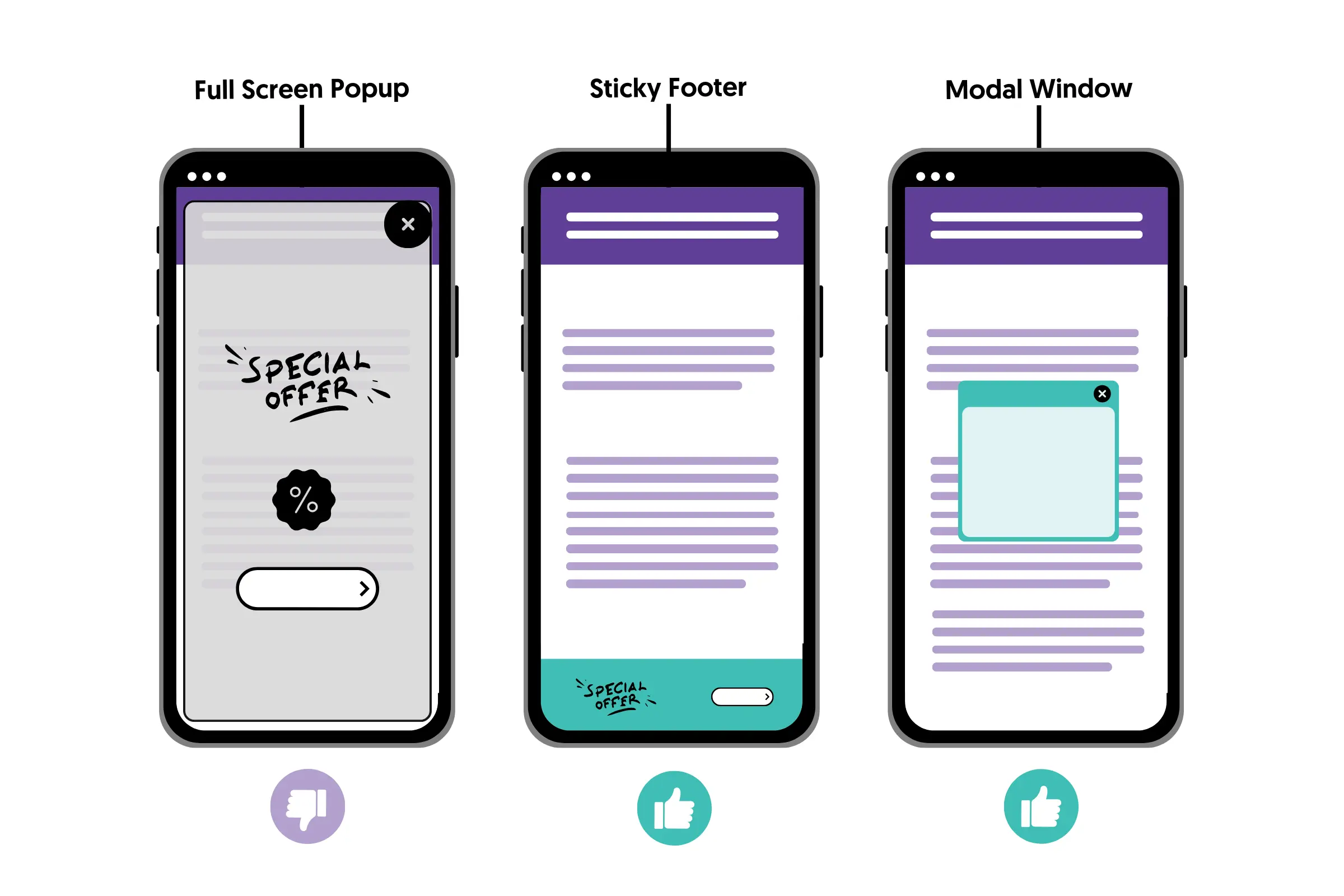

7. Check Your Website On Mobile

A mobile-friendly website is not only a technical SEO requirement; it is necessary to rank on Google. Google uses mobile-first indexing, which means it crawls and indexes pages using a smartphone user agent.

Most users access the web on mobile, and you must provide them with a great experience. In practice, this means:

- Your mobile website should have the same content as your desktop. If content is missing from mobile, Google will not crawl or index it.

- Your website should display on mobile without horizontal scrolling.

- Your website should load fast on mobile (more on this below).

- You should not use full-screen popups or other intrusive interstitials.

- Your website's content and navigation should be optimized for mobile devices. Easy-to-read fonts, big buttons, small paragraphs, and high contrast help enhance the user experience.

You can get more tips on optimizing your website for mobile in our Mobile SEO Best Practices guide.

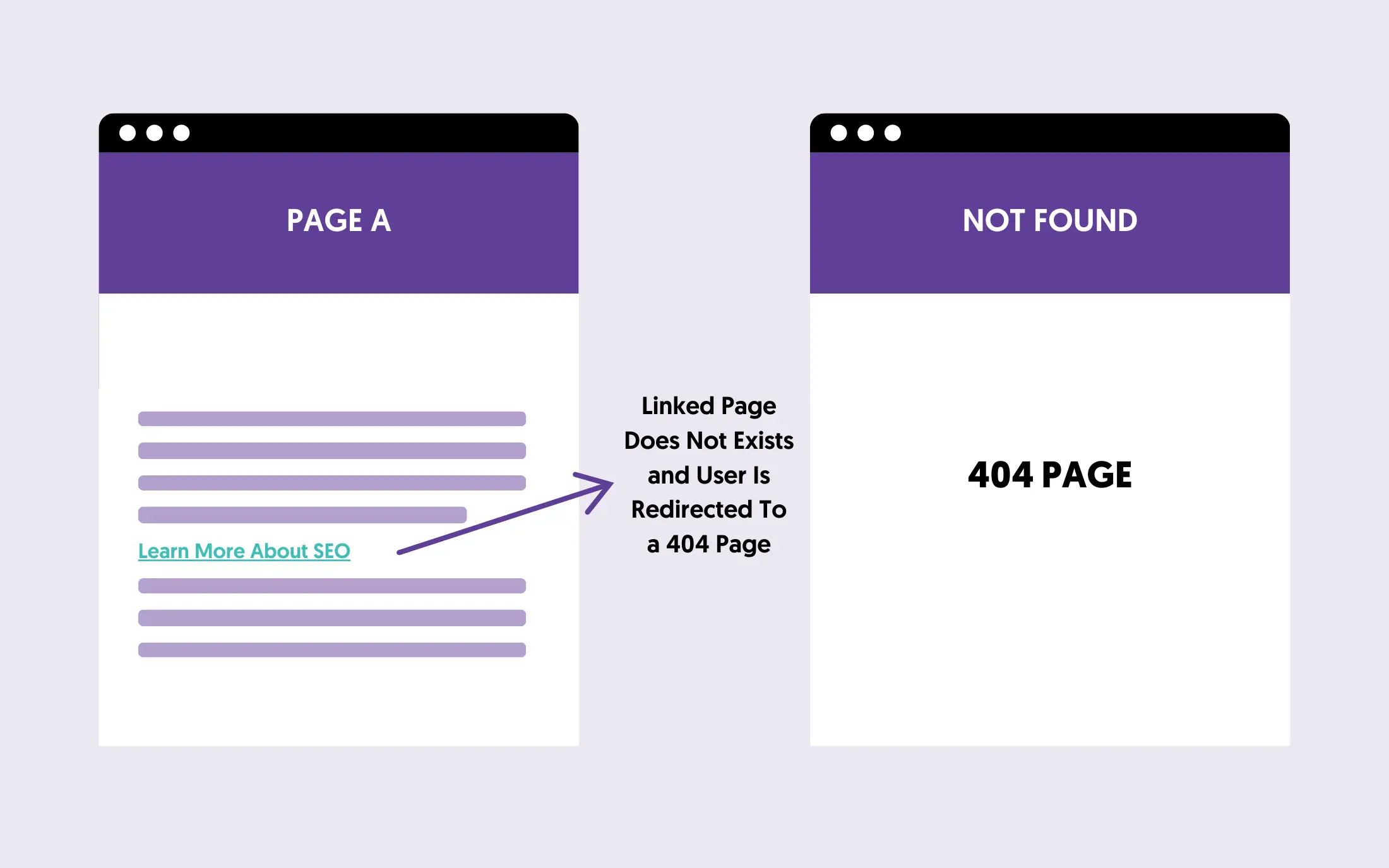

8. Find And Fix Broken Links

Broken links don’t directly affect your SEO rankings but are bad for the user experience. Having a couple of broken links is not a big issue, but too many broken links can increase your bounce rate and damage your domain's trust.

You should find and fix broken links, either within your website or links pointing to external websites.

You can use tools like Screaming Frog or Dead Link Checker to scan your website and find broken links.

It's easy and worth allocating the necessary time. For step-by-step instructions, read our guide on What Are Broken Links and How To Fix Them.
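To illustrate what such tools do for a single page, here is a minimal sketch that extracts the links from a page's HTML and reports the ones returning a 4xx or 5xx status. The names are hypothetical, and the status lookup is passed in as a function so you can swap in any HTTP client. `http_status` shows one option using the standard library; it uses HEAD requests, which some servers reject, and does not handle network failures.

```python
import urllib.error
import urllib.request
from html.parser import HTMLParser
from typing import Callable
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect absolute URLs from <a href="..."> tags."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        # Skip in-page anchors and non-HTTP schemes.
        if tag == "a" and href and not href.startswith(("#", "mailto:", "tel:")):
            self.links.append(urljoin(self.base_url, href))


def http_status(url: str) -> int:
    """Return the HTTP status code for url using a HEAD request."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code


def find_broken_links(page_url: str, html: str,
                      get_status: Callable[[str], int] = http_status) -> list[str]:
    """Return internal and external links on the page that respond with 4xx/5xx."""
    collector = LinkCollector(page_url)
    collector.feed(html)
    return [link for link in collector.links if get_status(link) >= 400]
```

A dedicated crawler is still the better choice for a full site, since it handles rate limiting, retries, and large numbers of pages.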

9. Check Your Site Speed And Core Web Vitals Scores

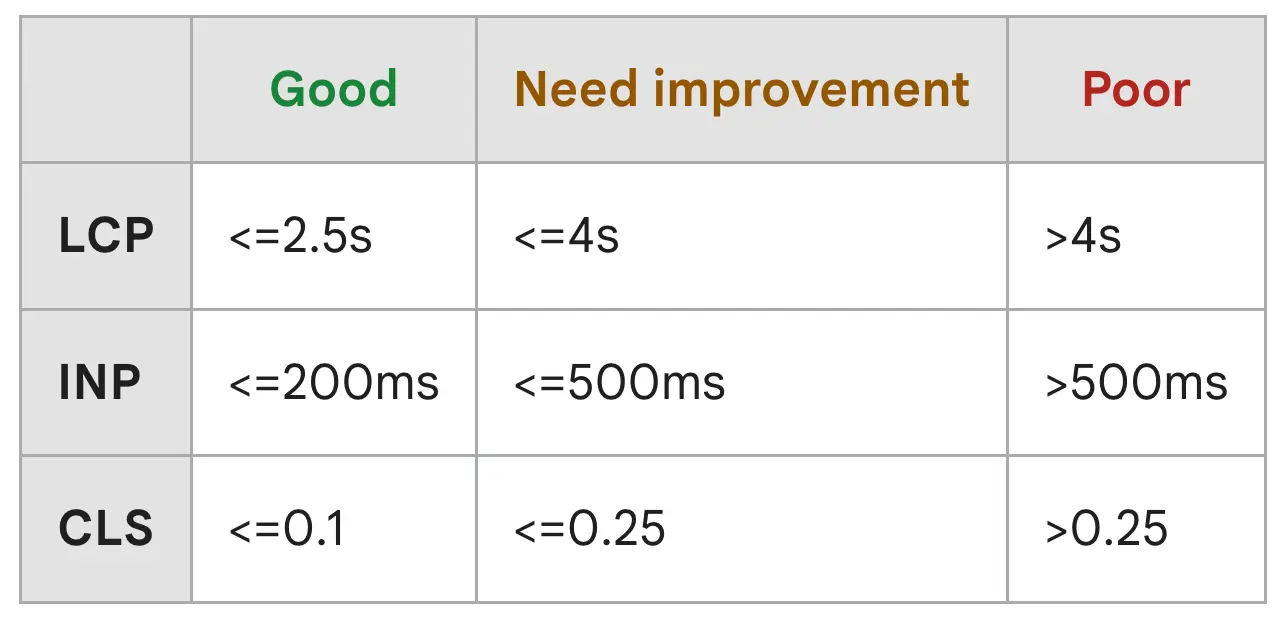

Core Web Vitals is a set of metrics that evaluate your website's performance on desktop and mobile. These are:

- LCP (Largest Contentful Paint): measures how quickly the largest visible element on the page (usually the main image or text block) is rendered. The ideal value is 2.5 seconds or less.

- INP (Interaction to Next Paint): measures the time it takes for your website to respond to user interactions. The ideal value is less than or equal to 200 milliseconds.

- CLS (Cumulative Layout Shift): measures the visual stability of your website by tracking unexpected layout shifts. The ideal value is less than or equal to 0.1.
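The thresholds above can be turned into a small helper for labeling values exported from a report. This is an illustrative sketch: the "good" limits are the ones listed above, while the "poor" limits (4 seconds, 500 milliseconds, 0.25) are the ones Google documents and are not part of this checklist.

```python
# (upper limit for "good", upper limit for "needs improvement") per metric.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless score
}


def rate(metric: str, value: float) -> str:
    """Label a Core Web Vitals value as good, needs improvement, or poor."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"
```

For example, `rate("LCP", 2.5)` is "good", `rate("INP", 350)` is "needs improvement", and `rate("CLS", 0.3)` is "poor".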

You can check your scores in the Core Web Vitals report of Google Search Console or by running a PageSpeed Insights report.

To get actionable advice to improve your page loading speed, read our Page Speed Guide.

10. Add And Test Structured Data

Structured data is code you can add to your website to help search engines understand your content better. It can also help you be featured in rich snippets and other search features that use structured data.

Some people consider it part of on-page SEO rather than technical SEO. Either way, you should review and implement it to make your website SEO-friendly.

Here is an example of what structured data code looks like:

What you have to do is the following:

- Check here to find which schema markup applies to your website.

- Use ChatGPT to create the relevant code (or use a plugin).

- Use the Schema markup validator to check your code.

- Add the code to the <head> of your website.

- Use the Rich Results Test to validate your implementation.
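If you prefer to generate the markup yourself, the sketch below builds a JSON-LD snippet for an article and wraps it in the script tag that goes in the <head>. The function name, the choice of the Article type, and the selected properties are assumptions for illustration; check which schema type and properties apply to your content and validate the output as described above.

```python
import json


def article_json_ld(headline: str, author: str, date_published: str, url: str) -> str:
    """Return a <script> tag containing schema.org Article markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date, e.g. 2024-01-15
        "mainEntityOfPage": url,
    }
    # Escape "</" so a value can never close the script tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Hypothetical values, for illustration only.
print(article_json_ld(
    "Technical SEO Checklist",
    "Jane Doe",
    "2024-01-15",
    "https://example.com/technical-seo/",
))
```

Building the data as a dictionary and serializing it with `json.dumps` avoids the quoting mistakes that are easy to make when writing JSON-LD by hand.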

For more details, go through our Schema Markup Guide.

11. Create And Submit An XML Sitemap To Search Engines

An XML sitemap is a special file that lists all pages on your website that search engines should know about.

By creating and submitting an XML sitemap to search engines, you help them discover new content faster, and it's also a way to inform them about updates to existing content.

Here is what to do if you don't already have a sitemap:

- Check with your CMS on how to create an XML sitemap. Most CMSs have this functionality built into them.

- Include only the most important pages of your website.

- Ensure that your sitemap includes any images found on a page.

- Ensure that your sitemap includes the last modification date (the <lastmod> element) for each URL.

- Find the URL of your XML sitemap. This is usually https://yourdomain.com/sitemap.xml.

- Go to Google Search Console and submit your Sitemap to Google.

- Go to Bing Webmaster Tools and submit your sitemap to Bing.

- Regularly check the GSC Sitemap Report for errors.
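If your CMS cannot generate a sitemap, a basic one is straightforward to build. The following sketch writes a sitemap with a location and last modification date for each page, using the namespace defined by the sitemaps.org protocol. The function name and sample URLs are assumptions, and image entries are left out to keep the example short.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (absolute URL, last modified as YYYY-MM-DD) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    declaration = '<?xml version="1.0" encoding="UTF-8"?>\n'
    return declaration + ET.tostring(urlset, encoding="unicode")


# Hypothetical pages, for illustration only.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/seo-tips/", "2024-02-01"),
])
print(sitemap_xml)
```

Save the output as sitemap.xml in the root of your site (encoded as UTF-8) before submitting its URL.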

For additional information, read our complete guide on XML sitemap optimization.

12. Make Sure You're Using HTTPS

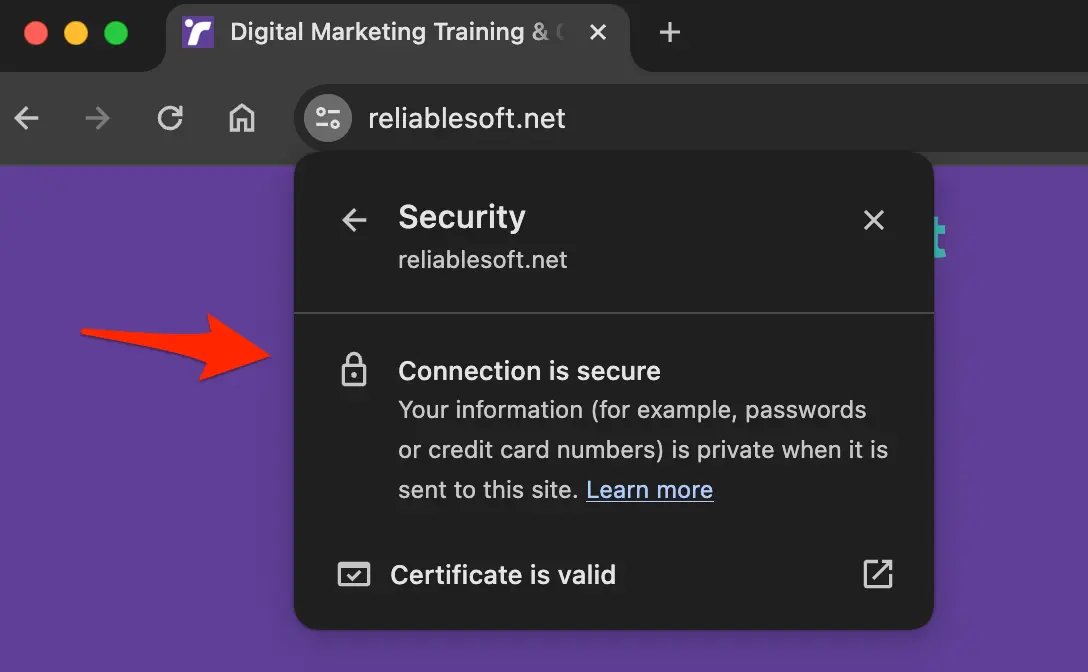

Another easy technical SEO check is ensuring your website is using HTTPS. HTTPS encrypts data between your users and your site, providing a secure browsing experience.

This helps protect users' data and is also good for your SEO, as HTTPS is a lightweight ranking signal.

To check if your site uses HTTPS, look for a padlock icon in the browser’s address bar or ensure your URLs start with "https://".

If not, contact your hosting provider to help install an SSL certificate from a trusted provider. Then, ensure you follow the best practices for migrating your website to HTTPS without losing SEO.

13. Use SEO Friendly URLs

Having an SEO-friendly URL structure is part of technical SEO and something that, although basic, is still important today. To achieve a search engine-friendly URL structure, you need to configure two things:

- Your URLs - Ensure they are short, descriptive, and include relevant keywords. Avoid using special characters, numbers, or unnecessary words. For example, use "yourdomain.com/seo-tips/" instead of "yourdomain.com/?p=12345".

- Your directories - Organize your content into logical categories and subdirectories so that similar content is grouped together. This helps both users and search engines understand the structure of your site.

For example, use "yourdomain.com/blog/seo" to group all SEO-related posts or "yourdomain.com/shoes/mens/athletic" to group related products together.
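Most CMSs generate URL slugs for you, but the idea is simple enough to sketch. The functions below turn a title into a short, lowercase, hyphen-separated slug and join category names into a directory-style URL. The names are hypothetical, and dropping accented characters' diacritics is a simplifying assumption.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Convert a title to a lowercase, hyphen-separated URL slug."""
    # Strip accents by decomposing characters and dropping non-ASCII marks.
    ascii_text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Replace every run of characters other than a-z and 0-9 with one hyphen.
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


def build_url(domain: str, *segments: str) -> str:
    """Join slugified segments into a directory-style URL."""
    path = "/".join(slugify(segment) for segment in segments)
    return f"https://{domain}/{path}/"
```

For example, `slugify("SEO Tips & Tricks!")` gives "seo-tips-tricks", and `build_url("yourdomain.com", "Shoes", "Mens", "Athletic")` gives "https://yourdomain.com/shoes/mens/athletic/".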

To learn more, read:

14. Implement Hreflang For Multilingual Websites

Implementing hreflang tags is important for multilingual websites to ensure search engines show users the correct language or regional URL.

Hreflang tags can be used to avoid duplicate content issues, improve user experience, and help search engines better understand your website's content.

To implement hreflang, you need to add code to your page's HTML that specifies the language (and optionally the region) of each version of the page.

Here is an example of how these tags work:

Use <link rel="alternate" hreflang="en" href="https://example.com/en/"> for English and <link rel="alternate" hreflang="fr" href="https://example.com/fr/"> for French.

You only have to do this if your website is available in multiple languages.
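Writing these tags by hand gets error-prone as the number of languages grows, so it can help to generate them. The sketch below produces one tag per language version; the function name is hypothetical. The optional `x-default` entry, which is not covered above, is a value Google supports for designating a fallback page for users whose language does not match any version. The same full set of tags, including the one pointing to the page itself, should appear on every language version.

```python
from html import escape
from typing import Optional


def hreflang_tags(alternates: dict[str, str], x_default: Optional[str] = None) -> list[str]:
    """Return one <link rel="alternate"> tag per language version."""
    tags = [
        f'<link rel="alternate" hreflang="{escape(lang)}" href="{escape(url)}">'
        for lang, url in alternates.items()
    ]
    if x_default:
        tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}">')
    return tags


versions = {
    "en": "https://example.com/en/",
    "fr": "https://example.com/fr/",
}
print("\n".join(hreflang_tags(versions)))
```

This prints the two tags from the example above, ready to paste into the <head> of both the English and French pages.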

Read this guide for more information.

15. Review Your Site Structure

Regardless of the website type, your site structure should be easy for users and search engines to understand. When reviewing your structure, consider the following tips:

- Use a Hierarchical Website Structure - Ensure each page is accessible from your homepage in fewer than three clicks.

- Group Related Content Into Categories - as shown in the example below.

- Use HTML and CSS For Navigation - don't use images for your navigation, but standard HTML tags.

- Use breadcrumbs with relevant schema markup.

- Use internal linking to "join" related pages together.

- Provide a user sitemap (in addition to an XML sitemap).
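The click-depth tip can be checked with a breadth-first search over your internal links. The sketch below assumes you already have a map of each page to the pages it links to (for example, from a crawler export); the function names and the sample site are hypothetical. It reads the guideline as "at most two clicks from the homepage", which you can adjust through `max_clicks`.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Return the minimum number of clicks from the homepage to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(links: dict[str, list[str]], home: str = "/", max_clicks: int = 2) -> list[str]:
    """Return pages deeper than max_clicks or not reachable from the homepage."""
    depths = click_depths(links, home)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    return sorted(p for p in all_pages if depths.get(p, max_clicks + 1) > max_clicks)


site = {
    "/": ["/blog/", "/shoes/"],
    "/blog/": ["/blog/seo/"],
    "/blog/seo/": ["/blog/seo/tips/"],
    "/orphan/": [],
}
print(too_deep(site))
```

Here "/blog/seo/tips/" is three clicks away and "/orphan/" has no internal links pointing to it, so both are reported. Pages like these are candidates for additional internal links.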

Here is a visual representation of a good site structure from the SEO Starter Guide.

If you have an established website, you should be careful when changing its structure. Even if you add 301 redirects, your rankings may still be impacted, so make structural changes only when necessary.

Make sure you read our guide on How to build your website structure for SEO for all the fine details.

Learn More About Technical SEO

To learn more about technical SEO and become an expert, continue learning with the following guides:

- Best Technical SEO Courses (Free & Paid)

- Technical SEO Course By Reliablesoft (part of SEO Course).

- Technical SEO Articles.