- How To Disallow Specific Pages In Robots.txt

- How To Block a Domain In Robots.txt

- How To Allow Access To All Crawlers And Content

- How To Disallow Access To Specific Folders

- How To Disallow Specific Bots

- How To Manually Overwrite The robots.txt File In WordPress

- How To Prevent Crawling of URL Parameters

- How To Restrict Image Indexing

- How To Block AI Crawlers From Accessing Your Content

- How To Prevent Content From Appearing In Search Results Using NoIndex Tag

- Learn More About Robots.txt And SEO

Robots.txt is a file located in your website's root folder. It lets you control which pages or parts of your website search engines can crawl.

When search engine bots visit your website, they check the contents of robots.txt to see if they can crawl the site and which pages or folders to avoid. One common use case of robots.txt is to prevent specific pages from appearing in searches, but as we'll see below, this is not always the best option.

How To Disallow Specific Pages In Robots.txt

To prevent search engines from crawling specific pages, you can use the disallow command in robots.txt.

For example, if you want to stop search engines from crawling a "thank you" page that users land on after submitting a form, add the following lines to your robots.txt file:

User-agent: *
Disallow: /thank-you.html

This tells all search engines (using User-agent: *) not to crawl the "/thank-you.html" page.

Ensure the path you enter matches the exact URL structure of the page you want to disallow.

If the URL of your "thank you" page is https://www.example.com/thank-you.html, enter only "/thank-you.html".

If the URL of your "thank you" page is https://www.example.com/checkout/thank-you.html, you should enter "/checkout/thank-you.html" in your robots.txt.
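You can test how a rule applies to full URLs by running it through Python's built-in urllib.robotparser module. This is a minimal sketch. The example.com URLs and the ExampleBot crawler name are hypothetical. The standard-library parser only does simple prefix matching, so treat the result as an approximation of how search engines read the file.

```python
from urllib.robotparser import RobotFileParser

# The rule from the "thank you" example; parse() takes an iterable of lines.
parser = RobotFileParser()
parser.parse([
    "User-agent: *",
    "Disallow: /thank-you.html",
])

# can_fetch() drops the scheme and host and checks whether the remaining
# path starts with a Disallow value, so the rule must match from the
# leading slash onward.
urls = [
    "https://www.example.com/thank-you.html",           # blocked
    "https://www.example.com/checkout/thank-you.html",  # allowed: different path
    "https://www.example.com/contact.html",             # allowed: no rule matches
]
for url in urls:
    print(url, "allowed" if parser.can_fetch("ExampleBot", url) else "blocked")
```

To block the checkout version instead, the rule would need to read Disallow: /checkout/thank-you.html.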

You can also block multiple pages by adding one Disallow line for each page:

User-agent: *
Disallow: /thank-you.html
Disallow: /some-other-page

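You can also block every URL with a specific extension. For example, to block all URLs ending in .html, combine the * wildcard (matches any sequence of characters) with $ (marks the end of the URL):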

User-agent: *
Disallow: /*.html$
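Python's urllib.robotparser does not support the * and $ wildcards. The sketch below therefore translates a rule into a regular expression by hand to show what /*.html$ matches. It follows the wildcard behavior Google documents: * matches any characters, a trailing $ anchors the end of the URL, and anything else is a prefix match. It is an illustration only, not any search engine's actual parser, and it ignores Allow rules and rule precedence. The paths are hypothetical.

```python
import re

def rule_to_regex(rule: str) -> re.Pattern:
    """Translate a robots.txt path rule using * and $ into a compiled regex."""
    anchored = rule.endswith("$")
    if anchored:
        rule = rule[:-1]
    # Escape the literal pieces, then put '.*' wherever a '*' was.
    body = ".*".join(re.escape(piece) for piece in rule.split("*"))
    return re.compile(body + ("$" if anchored else ""))

def is_blocked(rule: str, path: str) -> bool:
    # re.match() anchors at the start of the string, giving prefix-match semantics.
    return rule_to_regex(rule).match(path) is not None

for path in ["/thank-you.html", "/blog/post.html", "/page.html?ref=nav", "/about"]:
    print(path, "blocked" if is_blocked("/*.html$", path) else "allowed")
```

Note that /page.html?ref=nav is allowed. The $ requires the URL to end in .html, so a URL with a query string does not match the rule.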

You can also block only specific bots from a page. For example, to block Google from crawling a page while allowing all other crawlers, use:

User-agent: Googlebot
Disallow: /thank-you.html

This tells Google’s bot (Googlebot) not to crawl the "thank-you.html" page while allowing other search engines to access it. This is useful if you want to restrict Google’s access to certain content while making it available to other search engines like Bing or Yahoo.
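One way to confirm that only Google is affected is to run the rules through Python's urllib.robotparser, as in this sketch. The URL is a placeholder, and the standard-library parser only approximates how each search engine reads the file.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse([
    "User-agent: Googlebot",
    "Disallow: /thank-you.html",
])

url = "https://www.example.com/thank-you.html"
# Googlebot matches the group above. Bingbot matches no group, and because
# there is no "User-agent: *" group to fall back on, it is allowed everywhere.
for agent in ["Googlebot", "Bingbot"]:
    print(agent, "allowed" if parser.can_fetch(agent, url) else "blocked")
```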

How To Block a Domain In Robots.txt

To block a domain in robots.txt, use the "Disallow: /" command to prevent all search engines from crawling any part of your website. This instructs all search engines not to crawl any page on your site.

This is also referred to as the "disallow all" command.

User-agent: *
Disallow: /

Using this command stops search engines from crawling your site, which in practice keeps it out of search results (although URLs that other sites link to can still be indexed). Valid use cases include:

- Websites that are still under development and that you don't want search engines to crawl yet.

- Testing or staging environments you want to keep out of the index.
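"Disallow: /" blocks everything because rules are matched as path prefixes, and every path starts with "/". This sketch checks a few URLs on a hypothetical staging host with Python's urllib.robotparser. ExampleBot is a made-up crawler name.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse([
    "User-agent: *",
    "Disallow: /",
])

# "/" is a prefix of every path, so every URL is blocked, including the home page.
paths = ["/", "/blog/", "/thank-you.html"]
for path in paths:
    url = "https://staging.example.com" + path
    print(url, "allowed" if parser.can_fetch("ExampleBot", url) else "blocked")
```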

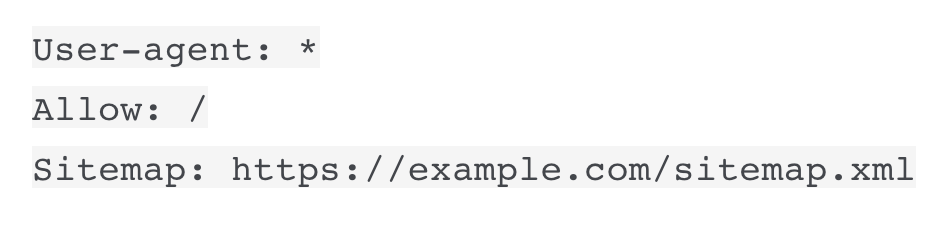

How To Allow Access To All Crawlers And Content

To unblock your website and give all crawlers access to all of your content, leave the Disallow value empty, as shown below.

This is also known as "allow all".

User-agent: *
Disallow:

When the Disallow: line is left empty, it tells all search engine bots that they are free to crawl every page on your site. This is the default behavior if search engines cannot locate a robots.txt file in your website's root folder.
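You can compare an empty Disallow value with having no rules at all by using Python's urllib.robotparser. This is a sketch. The URL and the ExampleBot name are hypothetical, and an empty rule list stands in for a missing file. When the parser actually fetches a file and gets a 404, it likewise allows everything.

```python
from urllib.robotparser import RobotFileParser

def make_parser(lines):
    parser = RobotFileParser()
    parser.parse(lines)
    return parser

allow_all = make_parser(["User-agent: *", "Disallow:"])  # explicit "allow all"
no_rules = make_parser([])  # no rules, like a site without robots.txt

url = "https://www.example.com/private/report.html"
print(allow_all.can_fetch("ExampleBot", url))  # True
print(no_rules.can_fetch("ExampleBot", url))   # True
```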

How To Disallow Access To Specific Folders

To disallow access to specific folders on your website, list those folders in your robots.txt file using the Disallow command. This prevents search engines from crawling any content within those directories.

For example, if you want to block search engines from accessing a folder called “private” and another folder named “tmp,” your robots.txt file would look like this:

User-agent: *
Disallow: /private/
Disallow: /tmp/

In this example, the trailing slash after the folder name (/private/ and /tmp/) indicates that everything within those folders should not be crawled.
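Disallow values are matched as prefixes of the URL path, which is why the trailing slash matters. This sketch uses Python's urllib.robotparser with hypothetical paths to show which URLs the two folder rules catch. A rule written without the slash, such as Disallow: /private, would also block /private-notes.html.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse([
    "User-agent: *",
    "Disallow: /private/",
    "Disallow: /tmp/",
])

paths = [
    "/private/report.html",  # inside the folder: blocked
    "/tmp/cache/data.txt",   # nested deeper: still blocked
    "/private",              # no trailing slash: not matched by "/private/"
    "/private-notes.html",   # only shares a prefix with the folder name: allowed
]
for path in paths:
    allowed = parser.can_fetch("ExampleBot", "https://www.example.com" + path)
    print(path, "allowed" if allowed else "blocked")
```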

How To Disallow Specific Bots

To disallow specific bots from crawling your site, you can target them individually in your robots.txt file by specifying their user agents. This allows you to block access to your site for certain bots while still allowing others to crawl it.

For example, if you want to block “Bingbot” but allow all other bots, your robots.txt file would look like this:

User-agent: Bingbot
Disallow: /

User-agent: *
Disallow:

In the example above, Bingbot is blocked from accessing any part of your site, as indicated by Disallow: /.

The User-agent: * followed by an empty Disallow: line allows all other bots to crawl your entire site.

When blocking specific bots, make sure the name in the User-agent line matches the bot's user-agent token. You can find a list of all known bots here.
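This sketch feeds the two groups to Python's urllib.robotparser to confirm that only Bingbot is shut out. The URL is a placeholder. The standard-library parser picks a group with a simpler name match than search engines use, so treat it as an approximation.

```python
from urllib.robotparser import RobotFileParser

robots_txt = """\
User-agent: Bingbot
Disallow: /

User-agent: *
Disallow:
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

url = "https://www.example.com/blog/"
# User-agent names are compared case-insensitively, so "bingbot" matches too.
for agent in ["Bingbot", "bingbot", "Googlebot"]:
    print(agent, "allowed" if parser.can_fetch(agent, url) else "blocked")
```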

How To Manually Overwrite The robots.txt File In WordPress

By default, WordPress uses a virtual robots.txt file that is generated dynamically from your WordPress settings and plugins and updates as you make changes in your dashboard. Because it's virtual, there is no robots.txt file on disk for you to open in a file manager, although you can still view its output at yourdomain.com/robots.txt.

For more control over how your site is crawled, overwrite the virtual file by uploading your own robots.txt file to your website's root folder. When a physical robots.txt file exists, it is served instead of the virtual one, so you can use any robots.txt command to tailor crawling to your needs.

Follow these steps:

- Access your site via FTP or File Manager - Use an FTP client like FileZilla or the File Manager tool in your hosting control panel (e.g., cPanel) to access your website’s files.

- Navigate to the root directory - This is usually named public_html, www, or it might simply be the main directory of your domain. This is where the robots.txt file should reside.

- Create a file named robots.txt - Use a text editor like Notepad to create the file and enter your desired rules.

- Upload and verify your file - Save your changes and upload the robots.txt file to your site's root directory via FTP or File Manager. After uploading, check that the file is accessible by visiting https://yourdomain.com/robots.txt in your browser.

- Let search engines find it - You don't have to submit your robots.txt to search engines; they discover it automatically. After a few days, check the robots.txt report in Google Search Console, which you can find under Settings.
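Besides opening the file in a browser, you can check the upload from a script. This Python sketch uses urllib.robotparser. robots_url and fetch_rules are hypothetical helper names, yourdomain.com is a placeholder, and fetch_rules needs network access to your live site.

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser

def robots_url(page_url: str) -> str:
    """Return the only location crawlers check: /robots.txt at the host's root."""
    parts = urlsplit(page_url)
    return f"{parts.scheme}://{parts.netloc}/robots.txt"

def fetch_rules(page_url: str) -> RobotFileParser:
    """Download and parse the live file (requires network access)."""
    parser = RobotFileParser(robots_url(page_url))
    parser.read()  # a 404 is treated as "allow all"; 401/403 as "disallow all"
    return parser

print(robots_url("https://yourdomain.com/blog/some-post/"))
```

After uploading, you could call fetch_rules("https://yourdomain.com/") and then can_fetch("Googlebot", "https://yourdomain.com/") on the result to confirm that the deployed rules behave as you expect.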

A good example of a robots.txt for most WordPress websites is:

It allows crawlers to access all content and also specifies the location of the XML sitemap file.
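A robots.txt file matching that description might look like the one in the sketch below, which also parses it with Python's urllib.robotparser (site_maps() requires Python 3.8 or later). The sitemap URL is a placeholder, not a WordPress default. Use the sitemap address your own site or SEO plugin generates.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: allow all crawlers everywhere and point to the XML sitemap.
robots_txt = """\
User-agent: *
Disallow:

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

print(parser.can_fetch("Googlebot", "https://www.example.com/any-page/"))  # True
print(parser.site_maps())  # ['https://www.example.com/sitemap.xml']
```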

How To Prevent Crawling of URL Parameters

If your site uses URL parameters (like ?id=123 or ?sort=asc), search engines might crawl multiple versions of the same page, leading to duplicate content issues. To prevent this, you can block search engines from crawling URLs with specific parameters:

User-agent: *
Disallow: /*?id=

This rule tells bots not to crawl any URL that contains ?id= in the query string. This helps you make better use of your crawl budget and keeps crawlers focused on your important pages.
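urllib.robotparser does not support wildcards, so this Python sketch translates the * wildcard into a regular expression by hand. It shows which hypothetical URLs /*?id= catches. It illustrates prefix-plus-wildcard matching as Google describes it and is not a search engine's parser. Note the last case: the rule only matches when id is the first parameter. A URL like /product?sort=asc&id=123 would still be crawled unless you also add a rule such as Disallow: /*&id=.

```python
import re

def matches(rule: str, path: str) -> bool:
    """Sketch: '*' matches any characters; otherwise the rule is a prefix match.
    A trailing '$' is not handled, since these rules don't use it."""
    pattern = ".*".join(re.escape(piece) for piece in rule.split("*"))
    return re.match(pattern, path) is not None

rules = ["/*?id="]
paths = ["/product?id=123", "/product?sort=asc", "/product?sort=asc&id=123"]
for path in paths:
    blocked = any(matches(rule, path) for rule in rules)
    print(path, "blocked" if blocked else "allowed")
```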

How To Restrict Image Indexing

If you want to prevent images on your site from appearing in Google Image Search, you can block image files from being crawled.

Assuming your images are in the "/images/" folder residing in your website's root directory, you can use the following command:

User-agent: Googlebot-Image
Disallow: /images/
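As a sketch, Python's urllib.robotparser can confirm that this group affects only Google's image crawler and only the /images/ folder. The URLs are placeholders.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse([
    "User-agent: Googlebot-Image",
    "Disallow: /images/",
])

image_url = "https://www.example.com/images/logo.png"
page_url = "https://www.example.com/about/"
print(parser.can_fetch("Googlebot-Image", image_url))  # False: image crawler blocked
print(parser.can_fetch("Googlebot", image_url))        # True: regular crawler unaffected
print(parser.can_fetch("Googlebot-Image", page_url))   # True: rule covers /images/ only
```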

How To Block AI Crawlers From Accessing Your Content

With the rise of AI tools such as ChatGPT, Claude, and others, you might want to keep the crawlers that collect content for them off your site. You can block known AI crawlers by specifying their user agents in your robots.txt file.

For example, to block OpenAI’s GPTBot, you would add the following lines:

User-agent: GPTBot
Disallow: /

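This tells GPTBot that it is not allowed to crawl any part of your site. You can block other AI crawlers the same way by identifying their user agents and adding them to your robots.txt file. Keep in mind that robots.txt is a request rather than an enforcement mechanism, so it only stops crawlers that choose to honor it.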

For a complete list of all AI bots, visit this page.
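Several AI crawlers can share one group if you stack User-agent lines above a single Disallow. This sketch uses GPTBot and CCBot (Common Crawl's crawler) as examples. Check the AI bot list mentioned above for the exact names you want to block. Python's urllib.robotparser confirms that ordinary search crawlers such as Googlebot are unaffected. The URL is a placeholder.

```python
from urllib.robotparser import RobotFileParser

# One group can list several user agents; the Disallow applies to all of them.
robots_txt = """\
User-agent: GPTBot
User-agent: CCBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

url = "https://www.example.com/articles/"
for agent in ["GPTBot", "CCBot", "Googlebot"]:
    print(agent, "allowed" if parser.can_fetch(agent, url) else "blocked")
```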

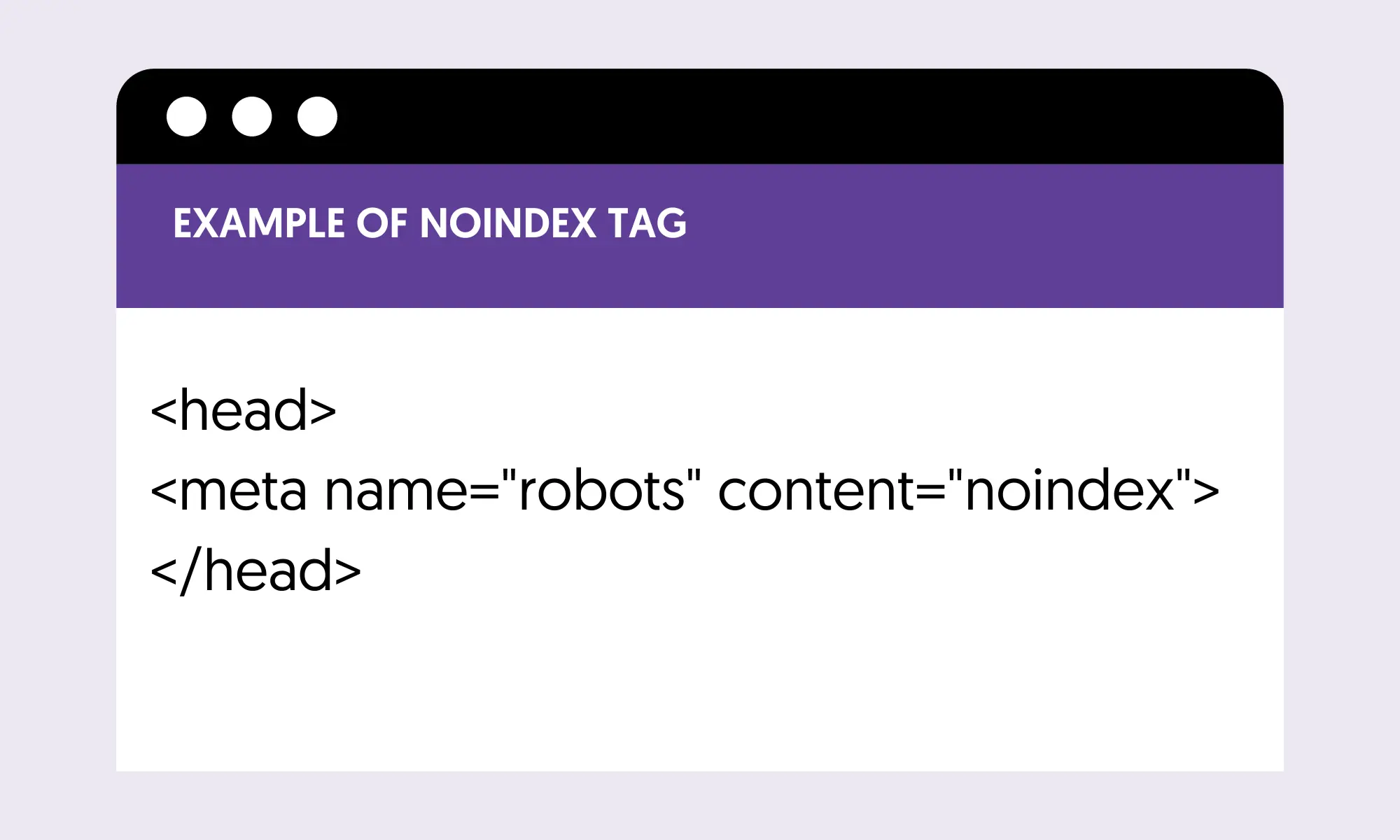

How To Prevent Content From Appearing In Search Results Using NoIndex Tag

As mentioned above, adding directives to robots.txt cannot guarantee that a page will stay out of search results. A Disallow rule stops search engines from crawling the page, but if another site links to it, the URL can still be indexed, usually without a description.

To keep content out of the search results, you must use the noindex tag.

When you use the noindex tag, you tell search engines that you don’t want a specific page included in their index. Thus, it won't appear in search results or count as content when evaluating your website's authority.

The noindex tag is a meta tag placed in the <head> section of the page's HTML:

<meta name="robots" content="noindex">

Make sure not to block a page in robots.txt if you’ve added a “noindex” tag, as search engines need to crawl the page to see and process the tag.
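To catch this mistake, you could combine Python's urllib.robotparser with the standard-library HTML parser, as in this sketch. The audit function, its return labels, and the sample page are hypothetical. A real check would fetch the live robots.txt and HTML instead of using inline strings, and this version only looks for the robots meta tag.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

class RobotsMetaFinder(HTMLParser):
    """Records whether the page has <meta name="robots" content="...noindex...">."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        values = {name: (value or "").lower() for name, value in attrs}
        if values.get("name") == "robots" and "noindex" in values.get("content", ""):
            self.noindex = True

def audit(robots_lines, url, html, agent="Googlebot"):
    """Flag pages whose noindex tag crawlers can never see."""
    robots = RobotFileParser()
    robots.parse(robots_lines)
    finder = RobotsMetaFinder()
    finder.feed(html)
    crawlable = robots.can_fetch(agent, url)
    if finder.noindex and not crawlable:
        return "conflict"  # blocked in robots.txt, so the noindex tag is never read
    if finder.noindex:
        return "noindex"   # crawlable, so the tag can take effect
    # "blocked" pages may still be indexed if other sites link to them
    return "indexable" if crawlable else "blocked"

page = '<html><head><meta name="robots" content="noindex"></head><body></body></html>'
url = "https://www.example.com/thank-you.html"
print(audit(["User-agent: *", "Disallow: /thank-you.html"], url, page))  # conflict
print(audit(["User-agent: *", "Disallow:"], url, page))                  # noindex
```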

Learn More About Robots.txt And SEO

Use the following guides and resources to learn more about Robots.txt and SEO.

- Robots.txt And SEO: Easy Guide For Beginners

- Technical SEO Best Practices

- Best Technical SEO Courses (include lessons on Robots.txt)

- Introduction to Robots.txt (Google Guidelines)