What Is a Website Conversion?

A website conversion is when a user performs an important action on your website, such as subscribing to a newsletter, filling out a contact form, or making a purchase. Your conversion rate is the percentage of visitors to your website who perform that action.

Some common examples of website conversions include:

Ecommerce Conversions:

- Making a purchase

- Adding a product to a cart

- Signing up for membership or subscription services

Lead Generation:

- Filling out a contact form

- Downloading a white paper or ebook

- Signing up for a webinar or event

General Conversions:

- Signing up for a newsletter

- Completing a survey

- Engaging with a chatbot for assistance

- Visiting a key page

- Clicking a specific button

Remember that website conversions can vary widely depending on the nature of the business and the actions it values most. You should analyze your business and define which website actions you want users to take. These will be your conversions.

What Is a Good Website Conversion Rate?

As a rule of thumb, a conversion rate over 2% is considered good, but this varies by industry, type of website, and conversion type. For example, 2% is a low conversion rate for email signups, but it's satisfactory for an ecommerce website selling expensive products.

To help you figure out if your conversion rate is good, you should do two things:

1. Monitor your conversion rate and try to improve it over time. Use the instructions below to determine your conversion rate for different actions and craft a plan for increasing it over time.

It's not easy and won't happen overnight, but without a monitoring system, you won't be able to measure your progress or lack thereof.

2. Compare your conversion rates with industry statistics. Published statistics can show how your conversion rate compares with other websites in your industry.

For example, this report from Statista shows the average conversion rate for ecommerce websites in different verticals.

The table below shows the industries with the highest conversion rates.

| Industry | Conversion Rate |
|---|---|
| Food & Beverage | 3.7% |
| Beauty & Skincare | 3.3% |
| General Apparel | 2.6% |
| Home Decor | 2.4% |
| General Footwear | 2.4% |

The table below shows the industries with the lowest conversion rates.

| Industry | Conversion Rate |
|---|---|
| Home Appliances | 1.6% |
| Handbags & Luggage | 1.2% |
| Luxury Apparel | 0.9% |
| Home Furniture | 0.8% |
| Luxury Handbags | 0.4% |
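To make this comparison repeatable, you could keep the benchmark figures in code and check your own rate against them. The sketch below is hypothetical: the `BENCHMARKS` dictionary simply copies the averages from the two tables above, and `compare_to_benchmark` is an illustrative helper, not part of any tool. Refresh the numbers from the latest report before relying on them.

```python
# Average ecommerce conversion rates (%) copied from the two tables above.
# These figures age quickly; update them from the current report.
BENCHMARKS: dict[str, float] = {
    "Food & Beverage": 3.7,
    "Beauty & Skincare": 3.3,
    "General Apparel": 2.6,
    "Home Decor": 2.4,
    "General Footwear": 2.4,
    "Home Appliances": 1.6,
    "Handbags & Luggage": 1.2,
    "Luxury Apparel": 0.9,
    "Home Furniture": 0.8,
    "Luxury Handbags": 0.4,
}


def compare_to_benchmark(industry: str, your_rate: float) -> float:
    """Return how far your rate (%) is above (+) or below (-) the industry average, in percentage points."""
    if industry not in BENCHMARKS:
        raise KeyError(f"No benchmark recorded for {industry!r}")
    return your_rate - BENCHMARKS[industry]


# Hypothetical example: a furniture store converting 1.1% of visitors.
gap = compare_to_benchmark("Home Furniture", 1.1)
print(f"{gap:+.1f} percentage points vs. the industry average")
```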

How Do You Calculate the Conversion Rate?

The formula to calculate your conversion rate is simple: (Number of conversions / Total number of visitors) × 100

For example, if your website gets 1,000 visitors and 20 of them buy a product from your store, your conversion rate is (20 / 1,000) × 100 = 2%.
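Here is the same formula as a small Python function. This is a minimal sketch that assumes you already have the raw counts from your analytics tool; the function name is illustrative.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Return the conversion rate as a percentage: (conversions / visitors) x 100."""
    if visitors <= 0:
        raise ValueError("visitors must be a positive number")
    if conversions < 0:
        raise ValueError("conversions cannot be negative")
    # Multiply first so whole-number inputs like 20 and 1000 give an exact result.
    return 100 * conversions / visitors


# The worked example from the text: 20 purchases from 1,000 visitors.
print(f"{conversion_rate(20, 1000):.1f}%")  # prints "2.0%"
```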

When calculating your conversion rate, it is important to track conversions correctly. This means clearly defining what counts as a conversion on your site (a sale, a sign-up, or a download) and making sure your analytics tools track these actions accurately.

For instance, if you run an online academy, conversions might include users who sign up for a trial or purchase a course. In that case, track separate events, such as a visit to the thank-you page after a user signs up for a trial and a visit to the payment confirmation page after a course purchase.

To make your calculations meaningful, calculate both the conversion rate of visitors who ended up purchasing a course and the conversion rate of trial users who went on to purchase. These calculations are more complex, so you may need a developer to add the necessary event tracking code to your website so you can use the data in reports.
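As a rough illustration of the online academy example, the sketch below assumes your tracking records a set of event names for each visitor. The event names `trial_thank_you_view` and `payment_confirmation_view` are hypothetical placeholders for the thank-you page and payment confirmation page visits described above. For simplicity, the sketch ignores the order in which events happened.

```python
# Hypothetical event names: use whatever your tracking code actually records.
TRIAL_SIGNUP = "trial_thank_you_view"
COURSE_PURCHASE = "payment_confirmation_view"


def academy_conversion_rates(user_events: dict[str, set[str]]) -> dict[str, float]:
    """Compute two conversion rates (in %) from each visitor's set of tracked events.

    Event order is ignored: a user counts as a trial buyer if both events
    appear anywhere in their history.
    """
    visitors = len(user_events)
    if visitors == 0:
        raise ValueError("no visitors recorded")

    buyers = [u for u, events in user_events.items() if COURSE_PURCHASE in events]
    trial_users = [u for u, events in user_events.items() if TRIAL_SIGNUP in events]
    trial_buyers = [u for u in trial_users if COURSE_PURCHASE in user_events[u]]

    return {
        # Share of all visitors who purchased a course.
        "visitor_to_purchase": 100 * len(buyers) / visitors,
        # Share of trial users who went on to purchase (0 if nobody took a trial).
        "trial_to_purchase": (
            100 * len(trial_buyers) / len(trial_users) if trial_users else 0.0
        ),
    }


sample = {
    "u1": {"page_view"},
    "u2": {"page_view", TRIAL_SIGNUP},
    "u3": {"page_view", TRIAL_SIGNUP, COURSE_PURCHASE},
    "u4": {"page_view", COURSE_PURCHASE},
    "u5": {"page_view"},
}
print(academy_conversion_rates(sample))
# {'visitor_to_purchase': 40.0, 'trial_to_purchase': 50.0}
```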

7 Ways To Improve Website Conversions

- Align Content with Search Intent

- Improve Page Experience Factors

- Analyze User Behavior

- Make It Easy to Convert

- Run Targeted A/B Tests

- Add Live Chat Support

- Add Trust Signals

1. Align Content with Search Intent

When optimizing your website for conversions, keep one thing in mind: the number one factor that drives conversions is the content of the page.

If the content (including the products or anything else you're selling) matches the search intent, you'll get conversions. If users don't find what they are looking for or aren't happy with your offering (type of product, price, etc.), you won't get conversions, no matter how many A/B tests you run or how you format your CTA buttons.

Before going further, take a closer look at your landing pages and make sure that:

- It is clear what you're offering. If it's a sales page, consider your unique selling proposition (USP). Why should users buy your product?

- If it's a newsletter sign-up page, tell users the benefits of registering for your newsletter.

- Analyze your competitors and make your offerings more attractive to users.

- Use keywords that users can recognize in your page titles, headings, and content. This will make your copy more relevant to the user's intent and good for SEO.

2. Improve Page Experience Factors

Page experience also affects the number of conversions. It covers four factors:

Website speed - Users don't like slow websites, and speed directly affects conversions. When optimizing your website for conversions, focus on Core Web Vitals, a set of metrics Google considers important to a page's overall user experience. Core Web Vitals consist of three measurements of loading performance, responsiveness, and visual stability:

- Largest Contentful Paint (LCP) - measures loading performance. Aim for 2.5 seconds or less.

- Interaction to Next Paint (INP) - measures responsiveness. Aim for 200 milliseconds or less.

- Cumulative Layout Shift (CLS) - measures visual stability. Aim for a CLS score of 0.1 or less.
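If you export these metrics from PageSpeed Insights or your own field data, a short script can flag which ones need work. This sketch hard-codes Google's published boundaries: "good" at or below 2.5 s, 200 ms, and 0.1, and "poor" above 4 s, 500 ms, and 0.25. Google assesses each metric at the 75th percentile of page loads, so the sample values stand in for 75th-percentile figures. They are made up for illustration.

```python
# (good_max, needs_improvement_max) for each Core Web Vital, per Google's thresholds.
THRESHOLDS: dict[str, tuple[float, float]] = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate_metric(name: str, value: float) -> str:
    """Classify a Core Web Vitals value as 'good', 'needs improvement', or 'poor'."""
    good_max, needs_improvement_max = THRESHOLDS[name]
    if value <= good_max:
        return "good"
    if value <= needs_improvement_max:
        return "needs improvement"
    return "poor"


# Hypothetical 75th-percentile measurements for one page.
measurements = {"LCP": 3.1, "INP": 180, "CLS": 0.02}
for metric, value in measurements.items():
    print(f"{metric}: {value} -> {rate_metric(metric, value)}")
```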

Use Google PageSpeed Insights to measure your current performance and get recommendations for improving it. Optimizing your images, leveraging browser caching, and minimizing JavaScript can all help reduce the time your website takes to load.

Website security - Make sure your website uses HTTPS, which encrypts the connection between users' browsers and your site. An SSL/TLS certificate protects your site's data in transit and reassures visitors that your site is trustworthy, which can increase conversion rates.

Mobile-friendliness - Your website should look and function well on devices of all sizes. Mobile-friendliness is not just about scaling down sizes but also about rethinking the layout and interaction to fit a smaller screen.

UX (User Experience) - Provide a clear and intuitive user interface that guides users through your website. This includes logical navigation, readable fonts, and a clear path to conversion with minimal distractions.

3. Analyze User Behavior

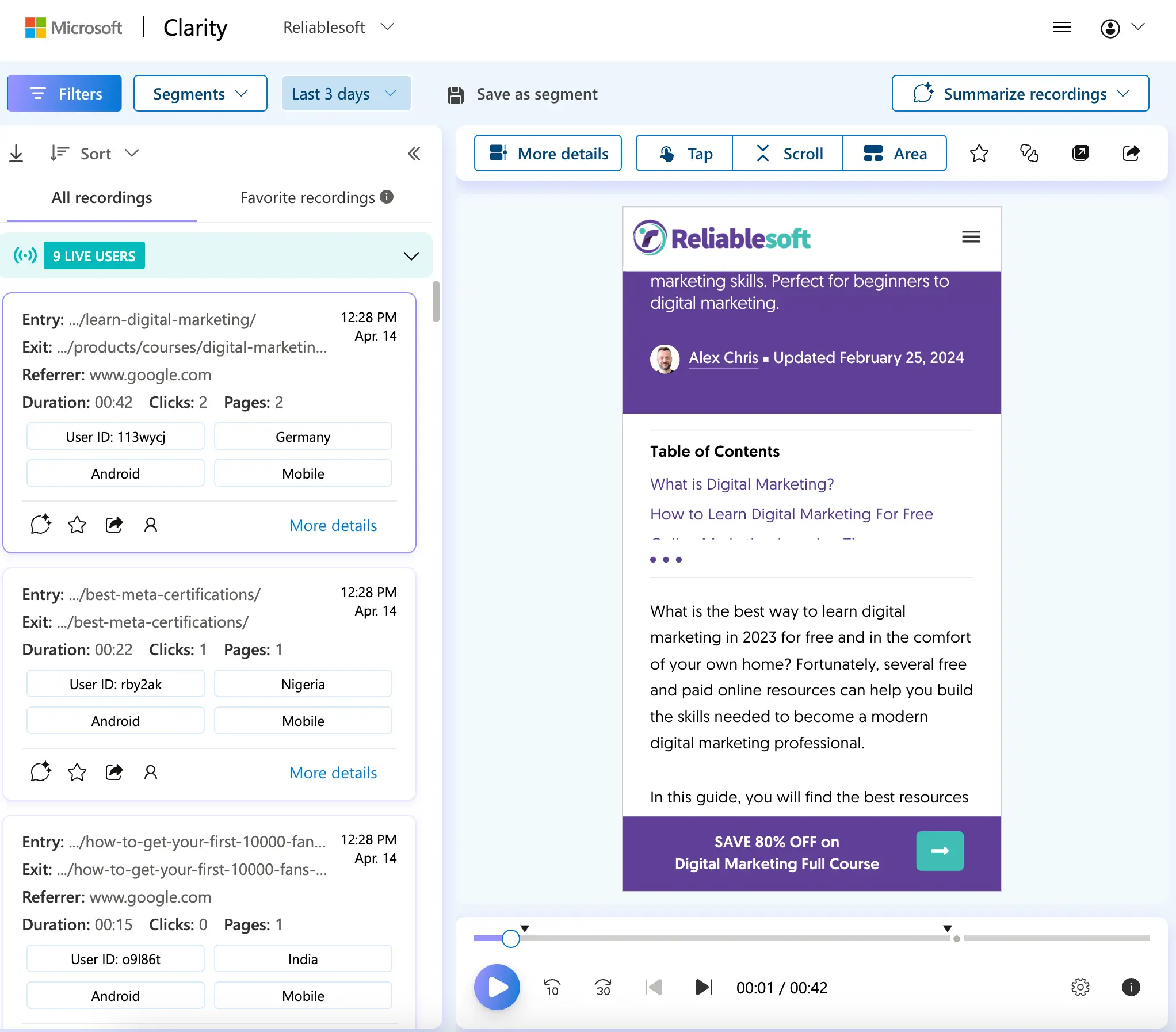

A heatmap and session recording tool is one of the most effective ways to understand how users interact with your website. I'm using Microsoft Clarity, a free and easy-to-use tool. All you have to do is add a script to your website, and then you can view heatmaps and monitor user sessions.

Analyzing the reports can give you invaluable insights into where users are clicking, how far they scroll, and where they spend the most time on your pages. This analysis lets you optimize your website layout and button placements to aid conversions.

Moreover, session recordings can highlight issues that aren't always apparent, such as unresponsive buttons or difficult navigation on mobile devices. Addressing these issues enhances user experience and naturally leads to improved conversion rates.

4. Make It Easy to Convert

Once users start an important action on your website, such as filling out a form or checking out, make it easy for them to complete the process without distractions. Consider these tips and examples:

Simplify Submission Forms: Reduce the number of fields in your forms to the bare essentials. For example, a name and email address are enough to capture a lead. Don't ask for information just because it would be nice to have. You can collect more details later as you engage your customers with valuable content.

Clear Call-to-Action (CTA): Make your CTAs stand out with contrasting colors and clear language. Ensure they are easy to find and understand. For instance, instead of "Submit," use "Get Your Free Ebook" to be more specific about what the user will receive. Instead of "Purchase", use "$149 - Get Course Now".

Streamline the Checkout Process: Simplify the checkout process to a few clear steps. Remove any unnecessary steps and ensure each part of the process is clear. For example, include a progress bar to show users exactly where they are in the process and what’s left to complete.

Offer Multiple Payment Options: At a minimum, a good checkout experience should accept credit cards, PayPal, Google Pay, and Apple Pay.

Allow a Guest Checkout: Include a guest checkout option to avoid forcing users to create an account. You can automatically create an account for them after they complete the purchase.

5. Run Targeted A/B Tests

Optimizing your website for conversions involves conducting many A/B tests, but they must be controlled and targeted to be meaningful. This means testing one element at a time and accurately measuring the results.

For example, to test different versions of your call-to-action buttons, first add event tracking code to capture clicks. Then calculate the button conversion rate: (number of button clicks / number of page visits) × 100.
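Once clicks are tracked, the calculation is just a count over your event log. The sketch assumes a hypothetical log format where each event is a dictionary with `name` and `page` keys, and the event names are `page_view` and `cta_click`. Adapt it to whatever your analytics export actually contains.

```python
from collections import Counter

# Hypothetical event names; your tracking setup may use different ones.
PAGE_VIEW = "page_view"
CTA_CLICK = "cta_click"


def button_conversion_rate(events: list[dict[str, str]], page: str) -> float:
    """(button clicks / page visits) x 100 for one page, computed from a raw event log."""
    # Count only the events recorded on the page being tested.
    counts = Counter(e["name"] for e in events if e["page"] == page)
    if counts[PAGE_VIEW] == 0:
        raise ValueError(f"no page views recorded for {page}")
    return 100 * counts[CTA_CLICK] / counts[PAGE_VIEW]


# Hypothetical baseline: 8 views and 2 CTA clicks on /pricing, plus unrelated blog traffic.
log = (
    [{"name": PAGE_VIEW, "page": "/pricing"}] * 8
    + [{"name": CTA_CLICK, "page": "/pricing"}] * 2
    + [{"name": PAGE_VIEW, "page": "/blog"}] * 5
)
print(f"{button_conversion_rate(log, '/pricing'):.1f}%")  # prints "25.0%"
```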

Once you have the baseline data, you can change the button text for some time (a couple of weeks, depending on your traffic) and compare the results.

Another example could be testing a different layout for your product pages. Start by analyzing user behavior on your current design. Use Microsoft Clarity heatmap reports to track where users click and how far they scroll.

Next, create a second page where you rearrange elements such as product descriptions, images, and reviews.

Publish the new page and, using internal links (or dedicated software), send 50% of your traffic to it and 50% to your existing page.
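If you split traffic yourself rather than through dedicated software, one common approach (swapped in here, not described above) is to hash a stable visitor ID so each visitor always lands in the same group. The sketch assumes you already have such an ID, for example from a first-party cookie. The `assign_variant` function and the experiment name are hypothetical.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "product-page-layout") -> str:
    """Deterministically place a visitor in one of two equal-sized groups."""
    # Hash the experiment name together with the visitor ID so the same visitor
    # always sees the same version, and separate experiments split independently.
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # roughly uniform value in 0..99
    return "new_layout" if bucket < 50 else "current_layout"


counts = {"new_layout": 0, "current_layout": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}")] += 1
print(counts)  # each group should be close to 5,000
```

Hashing instead of choosing randomly on every visit keeps the experience consistent for returning visitors, which matters when a purchase decision spans several sessions.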

After a reasonable period, review the heatmap report, conversion rates, time spent on the page, and other metrics to pick a winner.
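Heatmaps and time on page help explain why a version wins. To judge whether a difference in conversion rate is more than random noise, you can use a standard statistical test. The sketch below applies a two-proportion z-test, a common choice for comparing conversion rates but not something the tools above require. It assumes reasonably large samples, and the visitor and conversion counts are hypothetical.

```python
from math import erfc, sqrt


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("cannot test: pooled conversion rate is 0% or 100%")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution: 2 * (1 - Phi(|z|)).
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


# Hypothetical results: current page (A) vs. new layout (B), 10,000 visitors each.
z, p = two_proportion_z_test(conv_a=200, n_a=10_000, conv_b=260, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below 0.05 is a widely used, if somewhat arbitrary, threshold for calling a winner. Decide your sample size or test length in advance, because stopping as soon as the p-value dips below the threshold inflates false positives.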

6. Add Live Chat Support

Adding a live chat support button to your sales page can increase conversions in several ways. We have tested this technique multiple times with several clients, and it has consistently produced better results.

The first obvious way is that it gives potential customers an instant way to get answers to their questions. Customers browsing your products or services may have questions and need someone to talk to before making a final decision. You may already have the answers on the page, but not all users will read the content.

The second way live chat helps conversions is by reassuring users they can get support when needed. Even if most users will not use the live chat, its presence generates trust in your brand, which positively impacts conversions.

If your website doesn't have a live chat button, read our live chat reviews for the best options. Most solutions offer a 14-day trial, which is enough time to decide if installing a live chat button will lead to more conversions. Make sure to compare your conversion rate before and after the live chat to make an informed decision.

7. Add Trust Signals

Like a live chat button, trust signals are elements that help build trust between users and your brand. Here are some examples:

- Display security badges, especially on checkout pages, to reassure users that their transactions are secure.

- Customer testimonials and reviews (posted on third-party websites) can also be crucial in demonstrating real-life satisfaction with your products or services.

- Industry awards or certifications that highlight your expertise and reliability. For example, we are a Google Partner and display that on all our pages to highlight our credibility and expertise.

- An up-to-date, professional website design acts as a trust signal, suggesting a legitimate and serious business.

Depending on your industry, business, and products, you should consider other elements to help users feel confident that you're a legitimate business. The Internet is full of scammers, and users visiting your website for the first time need this reassurance to convert.